Make better-informed and faster decisions

Access to hard data from across an organization helps leadership make better-informed decisions, and make them faster.

Data integration pulls together information from a range of sources and transforms it into a single, consistent format. For companies whose data sits in repositories spread across multiple divisions and functions, the project must be carefully identified and scoped. A data integration process generally includes the following steps:

First, the organization identifies every data source that must be integrated into the unified solution. Sources can include on-premises and cloud-based databases, APIs, legacy systems, and spreadsheets.

Using appropriate tools or processes, data is then extracted from the identified sources. This may involve pulling files from remote locations, querying databases, or retrieving data through APIs.
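To make the extraction step concrete, here is a minimal Python sketch that pulls records from two kinds of source: CSV text (standing in for a file retrieved from a remote location) and a database query. It uses only the standard library; SQLite is an assumed stand-in for a real source database, and the function names and sample data are hypothetical.

```python
import csv
import io
import sqlite3

def extract_csv(text):
    """Read rows from CSV text, e.g. a file pulled from a remote location."""
    return list(csv.DictReader(io.StringIO(text)))

def extract_query(conn, query):
    """Run a query against a database source and return rows as dicts."""
    cursor = conn.execute(query)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]

# Hypothetical sources: a CSV export and a small in-memory "CRM" database.
csv_export = "id,name\n1,Ada\n2,Grace\n"
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
crm.execute("INSERT INTO customers VALUES (3, 'Linus')")

rows = extract_csv(csv_export) + extract_query(crm, "SELECT id, name FROM customers")
print(rows)
```

The CSV rows carry `id` as a string while the database returns an integer; reconciling differences like this is the job of the mapping and transformation steps.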

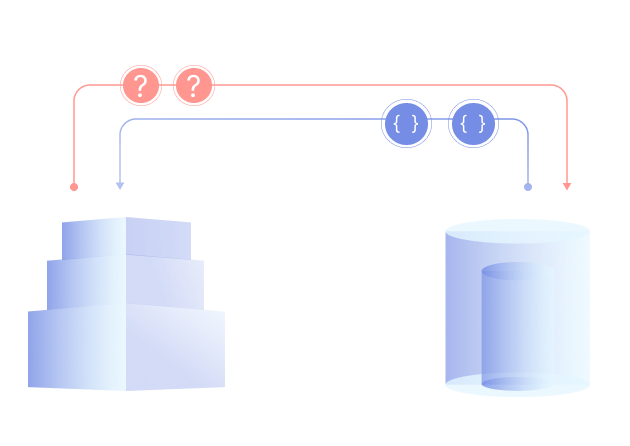

Different data sources may contain similar information yet use different terminology, codes, or structures to represent it. To align the data during integration, developers create and implement a mapping schema that defines how data elements in different systems correspond to one another.
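A mapping schema can be as simple as a lookup table per source system. The sketch below assumes two hypothetical systems, `crm` and `billing`, that store the same customer attributes under different field names and, for the state, different codes; every name in it is illustrative.

```python
# Hypothetical mapping schema: for each source system, map its field names
# to the unified field names used in the integrated dataset.
MAPPING = {
    "crm": {"cust_id": "customer_id", "fname": "first_name", "st": "state"},
    "billing": {"CustomerNo": "customer_id", "FirstName": "first_name", "Region": "state"},
}

# Some systems also encode the same value differently, so values are mapped too.
VALUE_MAPS = {
    "billing": {"state": {"California": "CA", "New York": "NY"}},
}

def apply_mapping(source, record):
    """Rename fields and translate coded values; unmapped fields are dropped."""
    fields = MAPPING[source]
    unified = {fields[k]: v for k, v in record.items() if k in fields}
    for field, codes in VALUE_MAPS.get(source, {}).items():
        if field in unified:
            unified[field] = codes.get(unified[field], unified[field])
    return unified

print(apply_mapping("crm", {"cust_id": 7, "fname": "Ada", "st": "CA"}))
print(apply_mapping("billing", {"CustomerNo": 7, "FirstName": "Ada", "Region": "California"}))
```

Both calls yield the same unified record, which is the point of the schema: downstream steps no longer need to know which system a row came from.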

Next, the data is validated: it is reviewed for errors and integrity issues to confirm its accuracy and quality. Quality assurance (QA) processes keep the unified data reliable and accurate.
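Validation rules are often written as small checks that return a list of problems per record, so failing records can be routed to review instead of being silently dropped. The rules below (required ID, plausible email, no future signup date) are hypothetical examples, not a standard rule set.

```python
from datetime import date

def validate(record, today=None):
    """Return a list of problems found in one record; an empty list means valid."""
    today = today or date.today()
    errors = []
    if record.get("customer_id") is None:
        errors.append("missing customer_id")
    email = record.get("email")
    if email is not None and "@" not in email:
        errors.append("malformed email")
    signup = record.get("signup_date")
    if signup is not None and signup > today:
        errors.append("signup_date is in the future")
    return errors

def split_valid(records):
    """Separate clean records from rejects so rejects can be reviewed (QA)."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected

records = [
    {"customer_id": 1, "email": "ada@example.com", "signup_date": date(2020, 5, 1)},
    {"customer_id": None, "email": "grace.example.com"},
]
valid, rejected = split_valid(records)
```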

The extracted data is then transformed into a common format so that it is compatible and consistent. This step may include additional data cleansing, enrichment, and normalization.
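Transformation typically normalizes types, text, and formats. This sketch assumes the mapped records carry dates in one of three hypothetical source formats and converts everything to ISO 8601; the field names are illustrative.

```python
from datetime import datetime

# Hypothetical date formats observed across source systems, tried in order.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")

def parse_date(text):
    """Normalize a date string in any known source format to ISO 8601."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def transform(record):
    """Cleanse and normalize one mapped record into the common format."""
    return {
        "customer_id": int(record["customer_id"]),           # unify types
        "first_name": record["first_name"].strip().title(),  # trim and fix casing
        "email": record["email"].strip().lower(),
        "signup_date": parse_date(record["signup_date"]),
    }

print(transform({"customer_id": "42", "first_name": "  ada ",
                 "email": "Ada@Example.COM", "signup_date": "05/01/2020"}))
```

The order of `DATE_FORMATS` decides how ambiguous strings such as `05/01/2020` are read, so in practice it should follow what each source system actually emits.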

In this stage, the transformed data is loaded into its target location, such as a data warehouse, for further analysis and reporting. Depending on requirements, data can be loaded in scheduled batches or in real time.
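The difference between batch and real-time loading can be shown with an in-memory SQLite database standing in for the warehouse (an assumption for illustration; real warehouses provide their own bulk-load tools). A batch job writes many rows in one transaction, while a real-time loader writes each record as soon as it arrives. Table and function names are hypothetical.

```python
import sqlite3

def create_warehouse():
    conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
    conn.execute(
        "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, first_name TEXT, email TEXT)"
    )
    return conn

def batch_load(conn, records):
    """Load many records in one transaction, as a scheduled batch job would."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO customers VALUES (:customer_id, :first_name, :email)",
            records,
        )

def load_one(conn, record):
    """Load a single record as soon as it arrives (real-time style)."""
    batch_load(conn, [record])

wh = create_warehouse()
batch_load(wh, [{"customer_id": 1, "first_name": "Ada", "email": "ada@example.com"},
                {"customer_id": 2, "first_name": "Grace", "email": "grace@example.com"}])
load_one(wh, {"customer_id": 3, "first_name": "Linus", "email": "linus@example.com"})
print(wh.execute("SELECT COUNT(*) FROM customers").fetchone()[0])
```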

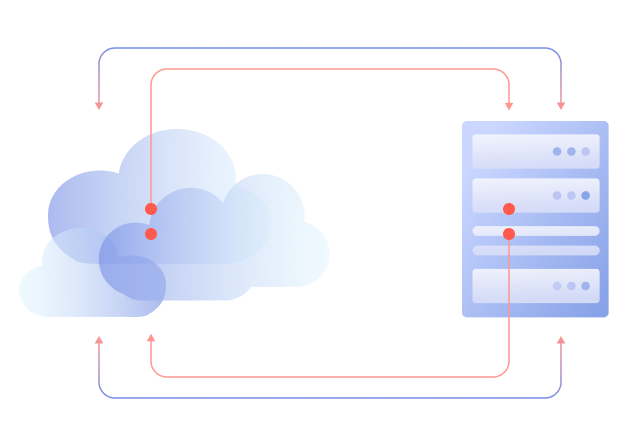

Data synchronization keeps the integrated data current. Updates can run periodically, for example overnight or during off-peak hours, or in real time when new data must be integrated immediately.
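One common way to implement periodic synchronization is an incremental sync driven by a "watermark": each run copies only the rows modified since the previous run. This sketch assumes the source table has an `updated_at` column holding ISO 8601 timestamps (which sort correctly as text); the table, column, and function names are hypothetical.

```python
import sqlite3

def sync_incremental(source, target, last_sync):
    """Copy only rows changed since the last sync; return the new watermark."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (last_sync,),
    ).fetchall()
    with target:
        target.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    return rows[-1][2] if rows else last_sync

src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
for db in (src, dst):
    db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
src.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                [(1, "Ada", "2024-01-01T09:00"), (2, "Grace", "2024-01-02T09:00")])
src.commit()

watermark = sync_incremental(src, dst, "")         # first run copies everything
src.execute("UPDATE customers SET name = 'Grace H.', updated_at = '2024-01-03T09:00' WHERE id = 2")
src.commit()
watermark = sync_incremental(src, dst, watermark)  # second run copies only row 2
```

A watermark query like this does not see deleted rows, which is one reason teams turn to change data capture (CDC) when deletes matter.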

Industries such as healthcare and finance face additional legal scrutiny related to privacy and security. Data governance practices ensure that sensitive data is integrated and handled in compliance with regulatory and privacy requirements.
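As one example of a governance control, sensitive fields can be pseudonymized before data lands in the integrated store. The sketch replaces the fields that a hypothetical policy marks as sensitive with a keyed HMAC-SHA256 hash, so records can still be joined on the same value without exposing it. The key handling and field list are assumptions, and pseudonymization alone does not guarantee compliance with any particular regulation.

```python
import hashlib
import hmac

# Assumption: in practice this key would come from a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

SENSITIVE_FIELDS = {"ssn", "email"}  # hypothetical policy for this dataset

def pseudonymize(value):
    """Replace a sensitive value with a keyed hash: joinable, but not readable."""
    return hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def apply_policy(record):
    """Mask every field the governance policy marks as sensitive."""
    return {k: pseudonymize(v) if k in SENSITIVE_FIELDS else v for k, v in record.items()}

a = apply_policy({"patient_id": 17, "ssn": "123-45-6789", "visit": "2024-03-01"})
b = apply_policy({"patient_id": 99, "ssn": "123-45-6789", "visit": "2024-04-09"})
```

Because the same input always produces the same token, `a["ssn"]` and `b["ssn"]` match, so analysts can still link the two records without seeing the underlying number.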

Users of integrated data often need an easier way to understand its source, meaning, and context. Metadata, which is information that describes the integrated data, meets this need and improves the data's discoverability and usability.
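Metadata can be modeled as a small catalog record per dataset. This sketch uses Python dataclasses to store each column's business meaning and its source field (its lineage), plus a simple search that makes columns discoverable. The structure and sample entries are hypothetical and far simpler than a real data catalog.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class ColumnMetadata:
    name: str
    description: str  # business meaning of the column
    source: str       # originating system and field (lineage)

@dataclass
class DatasetMetadata:
    name: str
    owner: str
    refreshed_at: datetime
    columns: list[ColumnMetadata] = field(default_factory=list)

    def search(self, term):
        """Find columns whose name or description mentions a term."""
        term = term.lower()
        return [c for c in self.columns
                if term in c.name.lower() or term in c.description.lower()]

customers = DatasetMetadata(
    name="customers",
    owner="sales-analytics",
    refreshed_at=datetime(2024, 1, 3, 2, 0, tzinfo=timezone.utc),
    columns=[
        ColumnMetadata("customer_id", "Unique customer key", "crm.cust_id"),
        ColumnMetadata("state", "Two-letter US state of the billing address", "billing.Region"),
    ],
)
print([c.source for c in customers.search("billing")])
```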

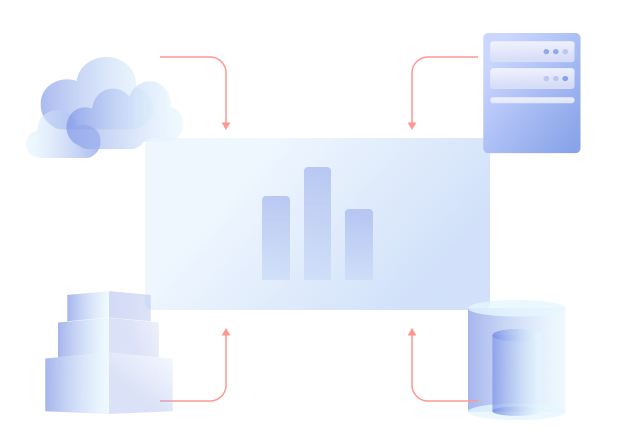

The integrated data sets can be accessed and analyzed with a range of tools, including reporting solutions, BI software, and specialized analytics platforms. Analysts and decision makers can then draw insights that shape business strategy and speed up decision-making. By integrating data from many sources, cleansing it, converting it to a common format, and keeping it current, companies gain a valuable tool for making better decisions faster and for supporting their DataOps initiatives.

There are several different kinds of data integration, each with its own advantages and drawbacks.

Extract, transform, load (ETL), the classic data processing approach, moves data in bulk or in batches. It extracts data from its sources into an intermediate staging area, transforms it there into the desired format, and then loads it into a data warehouse for analysis.

This tried-and-true method remains in use in many data processing systems, particularly where data quality and consistency are top priorities. However, it is poorly suited to real-time data processing, where speed and scalability are paramount.
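A minimal ETL flow in Python, assuming SQLite databases as stand-ins for the source system and the warehouse: rows are extracted into a staging area (here just an in-memory list), transformed outside the warehouse, and only then loaded. Table names and the USD-only rule are illustrative.

```python
import sqlite3

def extract(source):
    return source.execute("SELECT id, amount_cents, currency FROM orders").fetchall()

def transform(staged):
    """Runs outside the warehouse: convert cents to dollars and keep USD only."""
    return [(order_id, cents / 100) for order_id, cents, currency in staged if currency == "USD"]

def load(warehouse, rows):
    with warehouse:
        warehouse.executemany("INSERT INTO orders_usd VALUES (?, ?)", rows)

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, amount_cents INTEGER, currency TEXT)")
source.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                   [(1, 1999, "USD"), (2, 500, "EUR"), (3, 250, "USD")])
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE orders_usd (id INTEGER, amount REAL)")

staging = extract(source)            # midpoint staging area (an in-memory list here)
load(warehouse, transform(staging))  # only transformed rows ever reach the warehouse
```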

Extract, load, transform (ELT) is an evolution of ETL that takes advantage of the processing power of modern data storage systems. After data is extracted, it is loaded directly into a data warehouse and then transformed there into the system's common format. Because of its speed and flexibility, ELT is often preferred where scalability and near-real-time processing are required.
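For contrast, a minimal ELT sketch using the same hypothetical orders data: raw rows are loaded into the warehouse first, and the transformation runs afterward as SQL inside the warehouse engine. SQLite is again an assumed stand-in for a cloud data warehouse, and the table names are illustrative.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Load: raw source rows land in the warehouse untouched.
warehouse.execute("CREATE TABLE raw_orders (id INTEGER, amount_cents INTEGER, currency TEXT)")
with warehouse:
    warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)",
                          [(1, 1999, "USD"), (2, 500, "EUR"), (3, 250, "USD")])

# Transform: the warehouse's own SQL engine reshapes the data after loading.
# The raw table stays available, so new transformations can be added later.
warehouse.execute("""
    CREATE TABLE orders_usd AS
    SELECT id, amount_cents / 100.0 AS amount
    FROM raw_orders
    WHERE currency = 'USD'
""")
```

Keeping the raw table is a common reason teams choose ELT: a new business rule only requires a new SQL transformation, not a new extraction.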

Streaming data methods capture and process data in real time as source systems generate it, integrating it directly into a data warehouse or data lake for immediate use. Modern technology has introduced several real-time data integration methods, such as Change Data Capture (CDC), Application Integration via APIs, Data Virtualization, and Federated Data Integration, each offering distinct advantages for organizations that need up-to-the-minute insights.
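At its simplest, stream processing means updating results as each event arrives instead of waiting for a batch. This sketch uses a Python generator as a hypothetical event source and keeps running sales totals per store; a real deployment would read events from a streaming platform rather than a hard-coded list.

```python
from collections import defaultdict

def event_source():
    """Hypothetical source that yields sales events as they are generated."""
    yield {"store": "A", "amount": 20.0}
    yield {"store": "B", "amount": 5.0}
    yield {"store": "A", "amount": 7.5}

def process_stream(events):
    """Update running totals per store the moment each event arrives."""
    totals = defaultdict(float)
    for event in events:
        totals[event["store"]] += event["amount"]
        yield dict(totals)  # an up-to-date snapshot after every event

snapshots = list(process_stream(event_source()))
```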

Change Data Capture (CDC) tracks and replicates changes—such as inserts, updates, and deletes—in the source database to a target repository, enabling real-time data synchronization and integration with ETL or other tools. Log-based CDC is generally preferred for high-volume environments because it captures changes directly from transaction logs, minimizing performance impact on the database. Trigger-based CDC, while effective for real-time data capture, can impose a higher load on the database due to the overhead of triggers.
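Trigger-based CDC can be illustrated entirely in SQLite: triggers on a source table append every insert, update, and delete to a change table, and a downstream job replays those changes in order onto a target. Table, trigger, and function names are hypothetical. Each trigger performs an extra write per change, which is the overhead the paragraph above describes; log-based CDC avoids it by reading the database's transaction log, which this sketch does not attempt.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);

-- Change table that a downstream integration job reads from.
CREATE TABLE customers_changes (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT, id INTEGER, name TEXT
);

-- Triggers record every insert, update, and delete as it happens.
CREATE TRIGGER customers_ins AFTER INSERT ON customers
BEGIN INSERT INTO customers_changes (op, id, name) VALUES ('I', NEW.id, NEW.name); END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers
BEGIN INSERT INTO customers_changes (op, id, name) VALUES ('U', NEW.id, NEW.name); END;
CREATE TRIGGER customers_del AFTER DELETE ON customers
BEGIN INSERT INTO customers_changes (op, id, name) VALUES ('D', OLD.id, OLD.name); END;
""")

db.execute("INSERT INTO customers VALUES (1, 'Ada')")
db.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
db.execute("DELETE FROM customers WHERE id = 1")

def apply_changes(changes, replica):
    """Replay captured changes, in order, onto a target (a dict here)."""
    for _seq, op, key, name in changes:
        if op == "D":
            replica.pop(key, None)
        else:
            replica[key] = name
    return replica

changes = db.execute("SELECT seq, op, id, name FROM customers_changes ORDER BY seq").fetchall()
```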

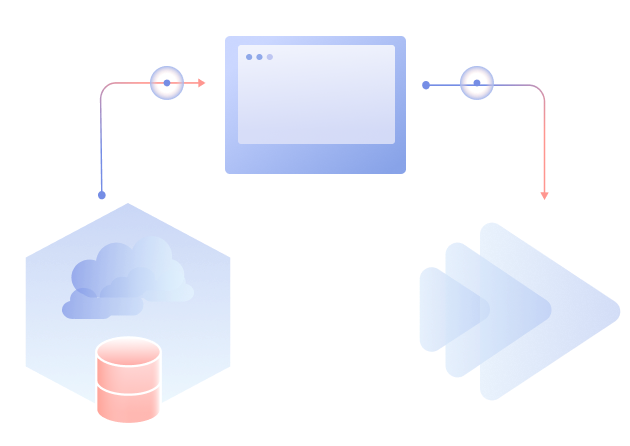

Application Integration using APIs allows different software applications to communicate and share data in real time, enabling seamless interactions and data flow between disparate systems. APIs support automation and real-time data exchange across platforms, and they play a critical role in scenarios such as connecting e-commerce sites to payment gateways or letting mobile apps interact with social media platforms.

Data Virtualization creates a virtual layer that gives users a unified, real-time view of data from multiple sources without physically moving the data. Organizations can access and manage data from sources such as data warehouses, data lakes, and cloud services as if it were stored in a single, centralized repository. Unlike data federation, data virtualization does not require a single unified data model and can handle updates to source systems directly, preserving data quality and minimizing errors.
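A data virtualization layer can be sketched as an object that holds one adapter per source and queries the sources lazily whenever the view is read, so no data is copied and source updates appear immediately. The sources here (a SQLite table and CSV text standing in for a cloud-service export) and all names are assumptions for illustration.

```python
import csv
import io
import sqlite3

class VirtualTable:
    """Unified, read-only view over several sources; nothing is copied.

    Each adapter reads its own source in its native format and translates
    rows into the view's shape on the fly.
    """

    def __init__(self, *adapters):
        self.adapters = adapters

    def rows(self, predicate=lambda row: True):
        # Sources are queried only when the view is read.
        for adapter in self.adapters:
            for row in adapter():
                if predicate(row):
                    yield row

warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE sales (region TEXT, total REAL)")
warehouse.execute("INSERT INTO sales VALUES ('EU', 120.0)")
cloud_export = "Region,Revenue\nUS,300.5\n"  # hypothetical cloud-service CSV

def from_warehouse():
    for region, total in warehouse.execute("SELECT region, total FROM sales"):
        yield {"region": region, "revenue": total}

def from_cloud():
    for r in csv.DictReader(io.StringIO(cloud_export)):
        yield {"region": r["Region"], "revenue": float(r["Revenue"])}

sales = VirtualTable(from_warehouse, from_cloud)
warehouse.execute("INSERT INTO sales VALUES ('APAC', 80.0)")  # a later source update
print(list(sales.rows()))  # the update is visible with no reload step
```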

Federated Data Integration leaves data in its original source systems and retrieves it in real time through on-demand queries. This approach is particularly useful for organizations with complex data ecosystems, because it provides access to data without extensive data movement or transformation. It minimizes data duplication and suits scenarios where data consistency and real-time access matter more than query performance.
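Federated access can be approximated with SQLite's `ATTACH DATABASE`, which lets one query join tables stored in separate database files. In this sketch, two files stand in for independent CRM and ERP systems (hypothetical names); each call attaches them, runs the query against their current contents, and closes the connection, so nothing is copied into a central store. Every query pays the cost of reaching the sources, which reflects the performance trade-off described above.

```python
import os
import sqlite3
import tempfile

def make_source(path, ddl, insert_sql, rows):
    """Create a small stand-in source system in its own database file."""
    conn = sqlite3.connect(path)
    with conn:  # commits the table and its rows
        conn.execute(ddl)
        conn.executemany(insert_sql, rows)
    conn.close()

workdir = tempfile.mkdtemp()
crm_path = os.path.join(workdir, "crm.db")
erp_path = os.path.join(workdir, "erp.db")
make_source(crm_path, "CREATE TABLE customers (id INTEGER, name TEXT)",
            "INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
make_source(erp_path, "CREATE TABLE invoices (customer_id INTEGER, amount REAL)",
            "INSERT INTO invoices VALUES (?, ?)", [(1, 50.0), (1, 25.0), (2, 10.0)])

def federated_query(sql):
    """Attach the sources at query time and run one query across both."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("ATTACH DATABASE ? AS crm", (crm_path,))
        conn.execute("ATTACH DATABASE ? AS erp", (erp_path,))
        return conn.execute(sql).fetchall()
    finally:
        conn.close()

totals = federated_query("""
    SELECT c.name, SUM(i.amount)
    FROM crm.customers AS c
    JOIN erp.invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""")
print(totals)
```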

Data integration is the practice of unifying or combining data from disparate areas within an organization into a single view.

Whether it’s sales, manufacturing, marketing or supply chain, modern businesses have access to more data than ever. But when that data is housed in a range of systems, saved in incompatible formats, and owned by different parts of the business, bringing it together so that company leadership can make sense of it can seem like an insurmountable challenge.

Data integration overcomes that challenge by applying the right set of practices and technologies to combine and use all types of data. Bringing together data from a range of sources produces a complete, up-to-date, and accurate dataset that helps the organization make data-driven decisions more quickly.

Successful implementation of a data integration plan faces several major challenges, many of which go well beyond budget constraints. Because data integration sits at the intersection of people and technology, an organization has a lot to consider before embarking on a major data integration initiative.