We use AI tools to provide our content in multiple languages. Because these translations are automated, there may be discrepancies between the English and the translated versions. The English version of this content is the official version. Contact BMC to speak with an expert who can answer your questions.


What is ETL (Extract, Transform, Load)?

ETL is a fundamental data-management process in modern organizations. Learn what ETL is, how it works, what benefits it offers, and how it compares with related processes such as ELT and reverse ETL.

ETL definition

ETL (extract, transform, load) is a process that extracts raw data from various sources, transforms it into a usable format, and loads it into a target system such as a data warehouse.

- Extract: Data is collected from sources such as databases, applications, or flat files.

- Transform: The data is cleansed, reformatted, and validated to ensure quality.

- Load: The transformed data is stored in a central repository, ready for analysis and decision-making.
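The three phases can be sketched as three small Python functions. This is a minimal illustration under stated assumptions: a CSV string stands in for a flat-file source, an in-memory SQLite database stands in for the data warehouse, and the function, table, and column names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical flat-file source (a CSV export), for illustration only
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,Carol,
"""


def extract(text: str) -> list[dict]:
    """Extract: read raw rows from the CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: validate, cleanse, and reformat each row."""
    clean = []
    for row in rows:
        if not row["amount"]:  # validation: drop rows without an amount
            continue
        clean.append((
            int(row["order_id"]),
            row["customer"].strip().title(),  # cleanse and standardize names
            float(row["amount"]),             # reformat text to a number
        ))
    return clean


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```

Keeping each phase in its own function makes it possible to test or replace one phase (for example, swapping SQLite for a real warehouse client) without touching the others.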

Why do organizations need ETL?

Organizations rely on ETL to create a single source of truth and to ensure that their data is clean, accessible, and actionable. Raw data is often unstructured, inconsistent, or incomplete, which makes it unsuitable for effective decision-making. ETL processes address these challenges by consolidating and refining data into a reliable, centralized format.

Improved data quality

ETL cleanses, validates, and enriches raw data to remove duplicates, resolve inconsistencies, and standardize formats. This ensures that businesses analyze trustworthy, accurate data.
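As a sketch of what removing duplicates and standardizing formats can mean in practice, the following Python snippet normalizes hypothetical customer records (mixed-case e-mail addresses, differently formatted dates) and then keeps one record per e-mail address. The field names and the accepted date formats are assumptions for illustration.

```python
from datetime import datetime

# Hypothetical raw records from two systems with inconsistent formats
records = [
    {"email": "Ana@Example.com ", "signup": "2024-03-01"},
    {"email": "ana@example.com", "signup": "01.03.2024"},
    {"email": "ben@example.com", "signup": "03/15/2024"},
]

# Date formats assumed to appear in the sources
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%m/%d/%Y")


def parse_date(value: str) -> str:
    """Standardize a date string to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def clean(rows: list[dict]) -> list[dict]:
    """Standardize fields, then keep the first record per e-mail address."""
    unique: dict[str, dict] = {}
    for row in rows:
        email = row["email"].strip().lower()
        normalized = {"email": email, "signup": parse_date(row["signup"])}
        unique.setdefault(email, normalized)  # later duplicates are ignored
    return list(unique.values())


print(clean(records))
# [{'email': 'ana@example.com', 'signup': '2024-03-01'},
#  {'email': 'ben@example.com', 'signup': '2024-03-15'}]
```

Standardizing before deduplicating matters here: the first two records only become recognizable as duplicates once the e-mail address is trimmed and lowercased.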

Centralized data access

By consolidating data from disparate systems in a single location, ETL enables organizations to create a "single source of truth." This breaks down silos and gives departments access to consistent, up-to-date information.

Better decision-making

Accurate, unified data enables leaders to analyze trends, gain insights, and make informed decisions. ETL ensures that businesses work with the best available information to drive strategy.

Scalability and automation

Modern ETL processes automate repetitive tasks and streamline data pipelines, so organizations can scale their data management without excessive manual effort. This becomes essential as businesses grow and must manage increasing data volumes efficiently.

To realize the full value of ETL, organizations often adopt DataOps practices, which help keep data workflows automated, reliable, and aligned with business requirements.

Learn more about how DataOps improves ETL workflows

ETL process

The ETL process is the foundation of effective data management. It moves data through three key phases to ensure that it is clean, structured, and ready for analysis.

Extract

The first step is to collect data from various sources, such as:

- Databases (e.g., MySQL, Oracle)

- APIs and web services

- Enterprise applications (e.g., CRM and ERP systems)

- Flat files, spreadsheets, and external data feeds
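Extracting from heterogeneous sources usually means bringing every record into one intermediate shape. The sketch below uses only the Python standard library: a SQLite table stands in for a database such as MySQL or Oracle, a JSON string stands in for an already-fetched API response, and a CSV string stands in for a flat file. All source contents and field names are hypothetical; a real pipeline would use the appropriate database driver and HTTP client.

```python
import csv
import io
import json
import sqlite3


def extract_from_db(conn: sqlite3.Connection) -> list[dict]:
    """Extract rows from a relational database table."""
    conn.row_factory = sqlite3.Row
    return [dict(r) for r in conn.execute("SELECT id, name FROM customers")]


def extract_from_api(payload: str) -> list[dict]:
    """Extract records from a JSON API response body (already fetched)."""
    return json.loads(payload)["items"]


def extract_from_flat_file(text: str) -> list[dict]:
    """Extract rows from a CSV flat file."""
    return list(csv.DictReader(io.StringIO(text)))


# Hypothetical sample sources
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
db.execute("INSERT INTO customers VALUES (1, 'Alice')")
api_body = '{"items": [{"id": 2, "name": "Bob"}]}'
csv_text = "id,name\n3,Carol\n"

# Tag each record with its source so later steps can trace lineage.
# Note: the CSV source yields "id" as a string, so types still need
# reconciling in the transform phase.
staged = (
    [{**r, "source": "db"} for r in extract_from_db(db)]
    + [{**r, "source": "api"} for r in extract_from_api(api_body)]
    + [{**r, "source": "file"} for r in extract_from_flat_file(csv_text)]
)
print(staged)
```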

Transform

After extraction, the data is cleansed, standardized, and enriched so that it is consistent and useful for analysis. Common transformation tasks include:

- Removing duplicates and resolving inconsistencies

- Aggregating data and aligning formats and structures

- Validating data for accuracy and completeness

- Enriching records by merging them with external or complementary data sources
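Several of the transformation tasks listed above can be combined in a few lines. This sketch validates hypothetical sales rows, enriches them by joining a lookup table of store regions, and aggregates revenue per region. The data, field names, and validation rule are assumptions chosen for illustration.

```python
from collections import defaultdict

# Hypothetical extracted sales rows
sales = [
    {"store": "S1", "amount": 120.0},
    {"store": "S2", "amount": 80.0},
    {"store": "S1", "amount": 30.0},
    {"store": "S3", "amount": -5.0},  # invalid: negative amount
    {"store": "S9", "amount": 40.0},  # invalid: unknown store
]

# Complementary reference data used for enrichment
store_regions = {"S1": "North", "S2": "South", "S3": "North"}


def is_valid(row: dict) -> bool:
    """Validation: amount must be non-negative and the store must be known."""
    return row["amount"] >= 0 and row["store"] in store_regions


def transform(rows: list[dict]) -> dict[str, float]:
    # Enrichment: attach the region from the lookup table to valid rows
    enriched = [
        {**r, "region": store_regions[r["store"]]} for r in rows if is_valid(r)
    ]
    # Aggregation: total revenue per region
    totals: defaultdict[str, float] = defaultdict(float)
    for r in enriched:
        totals[r["region"]] += r["amount"]
    return dict(totals)


print(transform(sales))  # {'North': 150.0, 'South': 80.0}
```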

Load

Finally, the transformed data is loaded into a target system, such as:

- Data warehouses (e.g., Snowflake, Redshift) for business intelligence

- Databases for further analysis or operational use

- Data lakes for storage and future exploration
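Loads are often written as an "upsert" so that re-running a job updates existing rows instead of duplicating them. The sketch below uses SQLite's `INSERT ... ON CONFLICT ... DO UPDATE` syntax (available in SQLite 3.24 and later) as a stand-in for a warehouse load. Real warehouses such as Snowflake or Redshift provide their own bulk-load and merge mechanisms, and the table and column names here are hypothetical.

```python
import sqlite3


def load(conn: sqlite3.Connection, rows: list[tuple[str, float]]) -> None:
    """Load transformed rows, updating existing keys instead of duplicating them."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS region_revenue "
        "(region TEXT PRIMARY KEY, revenue REAL)"  # key required for ON CONFLICT
    )
    conn.executemany(
        "INSERT INTO region_revenue (region, revenue) VALUES (?, ?) "
        "ON CONFLICT(region) DO UPDATE SET revenue = excluded.revenue",
        rows,
    )
    conn.commit()


warehouse = sqlite3.connect(":memory:")
load(warehouse, [("North", 150.0), ("South", 80.0)])
load(warehouse, [("North", 175.0)])  # re-run with a corrected value
print(warehouse.execute("SELECT * FROM region_revenue ORDER BY region").fetchall())
# [('North', 175.0), ('South', 80.0)]
```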

The ETL process streamlines data flows and makes it easier for organizations to analyze and use data for strategic decisions. Without ETL, raw data would remain fragmented, inconsistent, and hard to use, limiting the value organizations can extract from their information.

For organizations that want to optimize ETL processes and improve data delivery across teams, DataOps provides a framework for streamlining workflows and achieving end-to-end visibility.

Optimize your data flow with ETL

What is an example of ETL?

ETL is widely used across industries. Here is a practical example of the ETL process:

- Extract: A company pulls customer transaction data from its e-commerce platform, a CRM system, and flat files.

- Transform: Duplicate records are removed, product categories are standardized, and customer regions are tagged.

- Load: The cleansed and formatted data is loaded into a cloud data warehouse such as Snowflake for real-time business analytics.

By combining these disparate data sources, the company gains actionable insights into customer purchasing trends.
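The transform step of this scenario can be traced in a short Python sketch. The three source lists, the category mapping, and the country-to-region mapping are all hypothetical; the sketch only shows where deduplication, category standardization, and region tagging happen before the load.

```python
# Hypothetical transaction records from three sources
ecommerce = [{"txn": "T1", "customer": "C1", "category": "Shoes", "country": "DE"}]
crm = [
    {"txn": "T1", "customer": "C1", "category": "shoes", "country": "DE"},  # duplicate
    {"txn": "T2", "customer": "C2", "category": "Footwear", "country": "US"},
]
flat_file = [{"txn": "T3", "customer": "C3", "category": "bags", "country": "FR"}]

# Assumed mappings for standardization and tagging
CATEGORY_MAP = {"shoes": "Footwear", "footwear": "Footwear", "bags": "Accessories"}
REGION_MAP = {"DE": "EMEA", "FR": "EMEA", "US": "AMER"}


def transform(*sources: list[dict]) -> list[dict]:
    seen: set[str] = set()
    result = []
    for source in sources:
        for row in source:
            if row["txn"] in seen:  # remove duplicate transactions
                continue
            seen.add(row["txn"])
            result.append({
                **row,
                "category": CATEGORY_MAP[row["category"].lower()],  # standardize
                "region": REGION_MAP[row["country"]],               # tag region
            })
    return result


for row in transform(ecommerce, crm, flat_file):
    print(row["txn"], row["category"], row["region"])
# T1 Footwear EMEA
# T2 Footwear AMER
# T3 Accessories EMEA
```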

What is ETL in SQL?

ETL in SQL refers to using SQL (Structured Query Language) scripts to perform extract, transform, and load processes within relational databases. SQL is a powerful tool for managing data workflows because it can efficiently query, transform, and organize structured data sets.

How SQL is used in ETL:

- Extract: Use SQL queries to pull data from multiple tables, databases, or systems.

- Transform: Use operations such as joins, aggregations, filtering, and data cleansing to turn raw data into a usable format.

- Load: Insert or update the transformed data in reporting tables, data warehouses, or other target systems for analysis.
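A minimal SQL-based ETL step might look like the following, executed through Python's built-in `sqlite3` module so that the example is self-contained. The table and column names are hypothetical, and the SQL is SQLite's dialect; MySQL, Oracle, or warehouse dialects differ in details.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical source tables
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL);
    CREATE TABLE customers (customer_id INTEGER, region TEXT);
    INSERT INTO orders VALUES (1, 10, 50.0), (2, 10, 25.0), (3, 20, 40.0), (4, 30, NULL);
    INSERT INTO customers VALUES (10, 'north'), (20, 'South'), (30, 'north');

    -- Target reporting table
    CREATE TABLE sales_by_region (region TEXT PRIMARY KEY, total REAL, order_count INTEGER);

    -- Extract (SELECT), transform (JOIN, filter, clean, aggregate), load (INSERT)
    INSERT INTO sales_by_region (region, total, order_count)
    SELECT UPPER(c.region), SUM(o.amount), COUNT(*)
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    WHERE o.amount IS NOT NULL
    GROUP BY UPPER(c.region);
""")

print(conn.execute("SELECT * FROM sales_by_region ORDER BY region").fetchall())
# [('NORTH', 75.0, 2), ('SOUTH', 40.0, 1)]
```

A single `INSERT ... SELECT` statement keeps the whole step inside the database engine, which avoids moving rows through the client at all.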

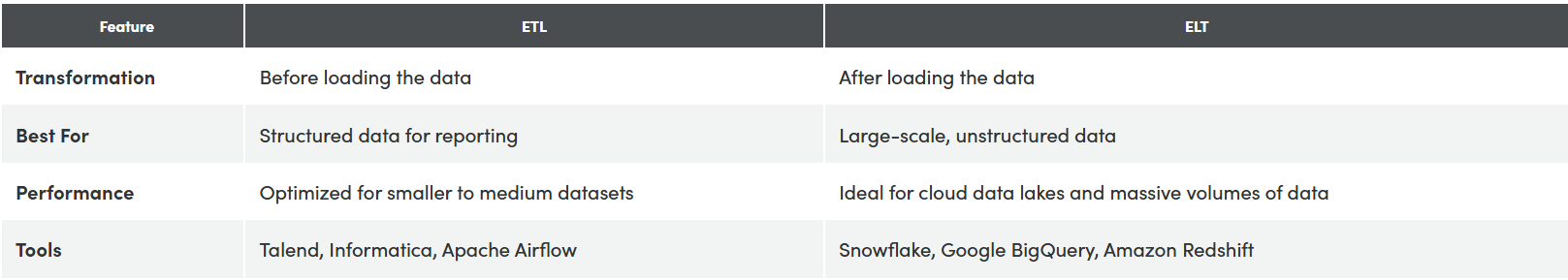

ETL vs. ELT

Although ETL (extract, transform, load) and ELT (extract, load, transform) are similar processes, they differ in the order of operations and in their ideal use cases:

- ETL: Data is transformed before it is loaded into the target system. Best for structured data and traditional reporting.

- ELT: Data is loaded first and transformed afterward. Ideal for large, unstructured data sets and modern cloud platforms.

ETL has traditionally been suited to on-premises systems and to scenarios where data quality and structure are critical before analysis. ELT, by contrast, excels in cloud-based environments, where raw, unstructured data can be transformed flexibly and at scale.
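The difference in ordering can be made concrete with SQLite standing in for the target system. In the ETL variant, Python cleans the data before it is loaded; in the ELT variant, the raw data is loaded first and transformed afterward with SQL inside the target. The data and table names are hypothetical, and in practice ELT relies on the compute of a cloud warehouse rather than an in-memory database.

```python
import sqlite3

# Hypothetical raw rows: names need cleaning, one score is not numeric
RAW = [("  Alice ", "10"), ("BOB", "x"), ("carol", "7")]


def run_etl() -> list[tuple]:
    """ETL: transform in the pipeline first, then load only clean rows."""
    conn = sqlite3.connect(":memory:")
    clean = [(n.strip().lower(), int(s)) for n, s in RAW if s.isdigit()]
    conn.execute("CREATE TABLE users (name TEXT, score INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", clean)
    return conn.execute("SELECT * FROM users ORDER BY name").fetchall()


def run_elt() -> list[tuple]:
    """ELT: load raw rows as-is, then transform inside the target with SQL."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_users (name TEXT, score TEXT)")
    conn.executemany("INSERT INTO raw_users VALUES (?, ?)", RAW)
    conn.execute(
        "CREATE TABLE users AS "
        "SELECT LOWER(TRIM(name)) AS name, CAST(score AS INTEGER) AS score "
        "FROM raw_users "
        "WHERE score <> '' AND score NOT GLOB '*[^0-9]*'"  # digits only
    )
    return conn.execute("SELECT * FROM users ORDER BY name").fetchall()


print(run_etl())  # [('alice', 10), ('carol', 7)]
print(run_elt())  # [('alice', 10), ('carol', 7)]
```

Both variants end with the same clean table; the ELT variant additionally keeps `raw_users` in the target, so the data can be re-transformed later without extracting it again.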

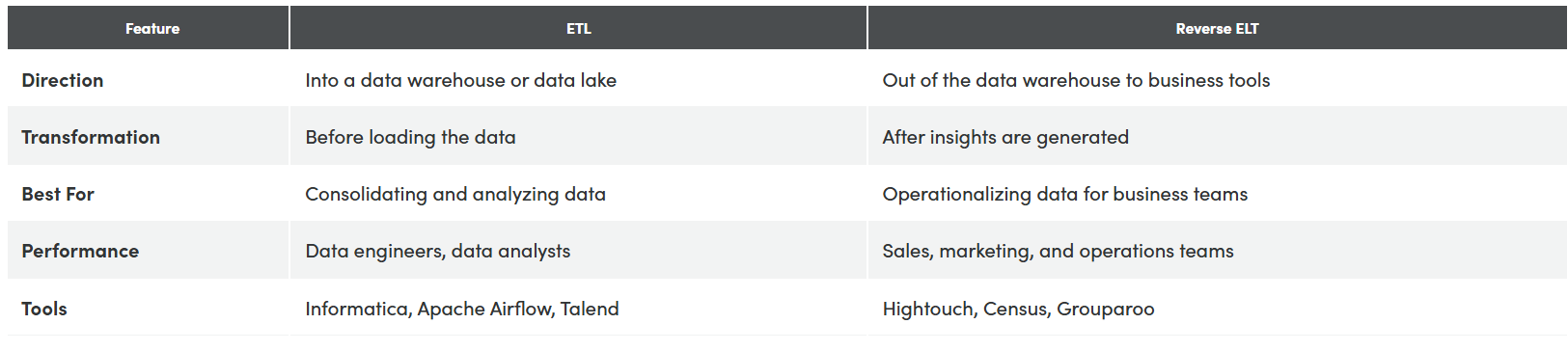

ETL vs. Reverse ETL

Although ETL and reverse ETL both involve moving data, they work in opposite directions and serve different purposes within the data pipeline:

- ETL: Moves data from various sources into a central data warehouse or data lake for storage and analysis.

- Reverse ETL: Moves processed insights from the data warehouse back into operational systems so that business teams can act on the data.

ETL produces clean, structured data that powers analytics and reporting. Reverse ETL turns those insights into actionable results in frontline business systems, closing the loop between data analysis and operational execution. Together, ETL and reverse ETL bridge the gap between data management and data activation, helping organizations make data-driven decisions and achieve tangible results.

Both ETL and reverse ETL benefit from a strong DataOps foundation, which helps automate, monitor, and improve data movement across the entire pipeline to deliver actionable insights.
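Reverse ETL runs in the opposite direction: it reads modeled results from the warehouse and turns them into updates for an operational tool. The sketch below queries a SQLite "warehouse" and builds update payloads for a CRM. The `push_to_crm` function and the payload shape are hypothetical placeholders, not the API of any real product, and the function only collects payloads instead of sending them.

```python
import sqlite3

# Hypothetical warehouse table with a computed customer metric
warehouse = sqlite3.connect(":memory:")
warehouse.executescript("""
    CREATE TABLE customer_scores (customer_id TEXT, lifetime_value REAL);
    INSERT INTO customer_scores VALUES ('C1', 1200.0), ('C2', 90.0), ('C3', 640.0);
""")

sent: list[dict] = []


def push_to_crm(payload: dict) -> None:
    """Hypothetical CRM client: a real sync would call the CRM's API here."""
    sent.append(payload)


def reverse_etl(threshold: float) -> None:
    """Read insights from the warehouse and sync them to the operational system."""
    rows = warehouse.execute(
        "SELECT customer_id, lifetime_value FROM customer_scores "
        "WHERE lifetime_value >= ? ORDER BY customer_id",
        (threshold,),
    )
    for customer_id, ltv in rows:
        push_to_crm({"id": customer_id, "fields": {"segment": "high_value", "ltv": ltv}})


reverse_etl(threshold=500.0)
print(sent)
# [{'id': 'C1', 'fields': {'segment': 'high_value', 'ltv': 1200.0}},
#  {'id': 'C3', 'fields': {'segment': 'high_value', 'ltv': 640.0}}]
```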

BMC products that use ETL

Control-M SaaS

Enables seamless ETL workflow orchestration by automating data extraction, transformation, and loading operations across complex IT environments.

BMC TrueSight

Supports ETL extraction by collecting and monitoring diverse data inputs (logs, events, and metrics) across the entire IT infrastructure for comprehensive analysis.
